Why the US regulatory playbook matters for lenders everywhere - and what it means for your institution

From CFPB enforcement to EU AI Act compliance, regulators worldwide are converging on the same fundamental questions: Can you explain your AI? Can you prove it's fair? Can you control it? This convergence creates both risk and opportunity for financial institutions globally. If you can't explain, audit, and justify your model, regulators will do it for you. This is your wake-up call.

Executive Summary

At HES FinTech, we've implemented AI lending solutions across 30+ countries and witnessed a fundamental shift in how regulators approach algorithmic decision-making. Early regulatory explorations in major financial markets have evolved into global regulatory convergence. Enforcement actions such as the more than $89 million in penalties and consumer redress that the CFPB ordered Apple and Goldman Sachs to pay over Apple Card failures are setting the tone worldwide.

The regulatory landscape worldwide has crystallized around three non-negotiable principles:

1. AI systems receive no special treatment under consumer protection laws globally. From the CFPB's position in the US to the EU AI Act requirements, the message is unambiguous: if you use algorithms to make credit decisions, you must explain them in plain language. "The algorithm decided" is no longer legally defensible anywhere.

2. Choosing to deploy AI creates legal liability across jurisdictions. US courts have held that an institution's decision to use algorithmic tools can itself be a policy that triggers disparate impact liability, even when the algorithm produces accurate results, and regulators elsewhere are moving in the same direction.

3. The compliance bar has been raised permanently worldwide. Generic adverse action notices violate regulations globally. Fair lending testing must be ongoing, not periodic. Model documentation must meet banking standards regardless of charter type or geography.

Our experience implementing compliant AI systems across diverse regulatory environments reveals a counterintuitive truth: institutions that build transparency and fairness into their AI architecture from inception move faster to market and scale more successfully than those who treat compliance as an afterthought. The Apple Card controversy demonstrates that technical sophistication without regulatory alignment creates existential business risk—a lesson now being heeded by financial institutions worldwide.

The global market opportunity remains extraordinary: the AI platform lending market is projected to reach $2.01 trillion by 2037.

However, success now requires mastery of both technological innovation and regulatory compliance across multiple jurisdictions. The institutions that recognize this dual requirement will define the next generation of global financial services.

This paper distills our practical experience helping lenders navigate this complex terrain across 30+ countries and our commitment to advancing responsible AI in financial services. While we champion innovation, we believe sustainable success comes from building AI systems that are transparent, fair, and compliant from their foundation.

01. The Global Convergence: Why AI Lending Challenges Are Universal

While our detailed analysis focuses on the US market, these challenges mirror concerns emerging across all major financial jurisdictions. From the EU's AI Act to Singapore's MAS guidelines, regulators worldwide are asking the same core questions about AI in lending.

Three Universal Problems Every Global Lender Faces:

1. The Explainability Gap

- US: CFPB demands specific, behavioral reasons for credit denials

- EU: AI Act requires transparency for high-risk AI systems (including credit scoring)

- UK: FCA Consumer Duty obligations demand clear customer communications

- Singapore: MAS emphasizes transparency and explainability in AI governance frameworks

- Universal Reality: "The algorithm decided" no longer works as an explanation anywhere

2. The Fairness Test

- US: Disparate impact analysis under ECOA

- EU: Fundamental rights compliance under AI Act

- UK: Consumer Duty's good outcomes requirement

- Canada: Human Rights Act applications to algorithmic decisions

- Universal Question: Can your AI prove it's fair across protected characteristics?

3. The Control Paradox

- Every jurisdiction wants: Fast, efficient AI-driven lending decisions

- Every jurisdiction fears: Loss of human oversight and accountability

- Every regulator demands: Governance frameworks that ensure control

- Universal Dilemma: How do you innovate with AI while maintaining regulatory control?

The Bottom Line: The US regulatory framework we examine isn't uniquely American—it's the blueprint for global AI governance in financial services. Today's CFPB enforcement becomes tomorrow's global standard.

02. Current State of AI in Global Lending Markets

Market Growth and Adoption

The convergence of artificial intelligence and lending is fundamentally restructuring financial services globally. At HES FinTech, our analysis across 30+ countries shows this isn't just growth—it's a complete transformation of how lending operates worldwide. Early adopters stand to gain significant advantages while traditional institutions risk obsolescence.

- Global Digital Lending Platforms: $507.27 billion in 2025, growing at 11.90% CAGR to reach $889.99 billion by 2030, per Mordor Intelligence's global digital lending market analysis (source)

- AI Platform Lending Market: $109.73 billion in 2024 growing to $158.22 billion in 2025, ultimately reaching $2.01 trillion by 2037 at 25.1% CAGR, according to Research Nester's analysis of the global AI platform lending market (source)

- 73% of US mortgage lenders cite improved operational efficiency as their primary motivation for AI adoption (source)

- 85% of banks globally use AI to automate lending processes, based on Market.us global banking survey (source)

- 75% of UK financial services firms are already using AI, up from 58% in 2022, and another 10% plan to adopt it within three years (source)

- Generative AI could deliver $200-340 billion in additional annual value to the banking industry through increased productivity, equivalent to 9-15% of operating profits (source)
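The compound growth figures above can be sanity-checked with the standard formula CAGR = (end / start)^(1 / years) - 1. The sketch below applies it to the two forecasts cited. It only tests whether the quoted numbers are arithmetically consistent and says nothing about the forecasters' methodology.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start <= 0 or years <= 0:
        raise ValueError("start must be positive and years must be at least 1")
    return (end / start) ** (1 / years) - 1


# Digital lending platforms (Mordor Intelligence), $ billions, 2025 -> 2030.
digital = cagr(507.27, 889.99, 2030 - 2025)

# AI platform lending (Research Nester), $ billions, checked from both base years.
ai_from_2024 = cagr(109.73, 2010.0, 2037 - 2024)
ai_from_2025 = cagr(158.22, 2010.0, 2037 - 2025)

print(f"Digital lending 2025-2030: {digital:.1%}")
print(f"AI lending 2024-2037:      {ai_from_2024:.1%}")
print(f"AI lending 2025-2037:      {ai_from_2025:.1%}")
```

On these inputs, the digital lending figures reproduce the quoted 11.90%. The 25.1% AI lending rate matches the 2024 base over 13 years. Measured from the 2025 figure, the implied rate is closer to 23.6%.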

Performance Metrics - Real Cases

Our client implementations have consistently delivered measurable results from AI-driven automation, one of the key components of the broader digital transformation process. These outcomes are not isolated: industry research and benchmarks point to similar gains.

- Up to 20× faster loan processing — reducing time-to-decision from 20–30 days to just 2–24 hours

- 3× improvement in credit scoring accuracy — enabling more precise and data-driven risk assessments

- 25% average reduction in default rates — driven by improved modeling and alternative data integration

- 50%–90% reduction in decision-making time — through automated and hybrid underwriting models

- Up to 90% of lending workflows fully automated — from application intake to servicing and collections

Critical Challenges Identified by Industry Research

Industry studies globally consistently highlight major barriers to successful AI adoption—from talent gaps to legacy systems and regulatory complexity.

- Global Talent Shortage: Demand for data science skills continues to outpace supply worldwide. The U.S. Bureau of Labor Statistics projects 36% growth in data scientist employment from 2023 to 2033, and similar shortages exist across Europe and Asia (source)

- Implementation Challenges: Industry research finds that roughly 70% of the challenges companies face when implementing AI stem from people- and process-related issues, 20% from technology problems, and only 10% from the AI algorithms themselves. 74% of companies struggle to achieve and scale value from AI (source)

- Legacy Systems: With 59% of institutions already processing sensitive banking data in the cloud, traditional lenders that still manage regulated information on-premises face mounting pressure to modernize or risk losing market share to AI-enabled competitors (source)

- Regulatory Compliance: Financial institutions worldwide are cautious about gen AI for several reasons, including concerns about customer acceptance and impact, overreliance on third-party model providers, and regulatory uncertainty (source). The largest perceived regulatory constraint on the use of AI globally is data protection and privacy, followed by resilience, cybersecurity, and third-party rules (source)

03. Comprehensive Regulatory Framework: Federal, State, and Global Standards

The Legal Foundation: ECOA and Beyond

The regulatory landscape for AI-powered lending in the United States rests on decades-old civil rights legislation that has proven remarkably adaptable to new technologies. At its core, the Equal Credit Opportunity Act (ECOA) and its implementing Regulation B establish fundamental requirements that every lender must follow, regardless of whether they use pencil-and-paper calculations or sophisticated machine learning algorithms.

ECOA Summary:

- Name: Equal Credit Opportunity Act (15 U.S.C. §1691 et seq.);

- Key Requirements: Prohibits credit discrimination; requires specific reasons for credit denials; applies to all credit decisions regardless of technology used (source)

The Evolution of CFPB Enforcement

The Consumer Financial Protection Bureau has progressively sharpened its focus on algorithmic lending, transforming broad principles into specific technical requirements. This evolution reflects a growing sophistication in understanding how AI can perpetuate or mask discrimination.

In its pivotal August 2024 comment to the Treasury Department, the CFPB made its position crystal clear: "There are no exceptions to the federal consumer financial protection laws for new technologies." This statement effectively closed any perceived loopholes that fintech companies might have hoped existed for AI-driven lending.

More significantly, the Bureau noted that "Courts have already held that an institution's decision to use algorithmic, machine-learning or other types of automated decision-making tools can itself be a policy that produces bias under the disparate impact theory of liability." Regulators elsewhere, from the EU to Singapore, are moving toward similar principles.

CFPB AI Guidance (2024) Summary:

- Name: CFPB Comment on Treasury RFI on AI in Financial Services;

- Key Requirements: No technology exceptions; AI use can create disparate impact liability; requires robust fair lending testing and searches for less discriminatory alternatives (source)

From Generic to Specific: The New Standard for Explanations

The CFPB's enforcement has evolved from accepting generic explanations to demanding behavioral specificity—a trend now emerging globally. Under current guidance, telling a borrower they were denied due to "purchasing history" is insufficient and non-compliant. Instead, lenders must provide concrete details such as "Multiple cash advances exceeding 30% of income in past 60 days."

CFPB Circular 2023-03 formalized this shift, stating explicitly that "Creditors cannot state reasons for adverse actions by pointing to broad buckets." The days of checking boxes on pre-printed forms are over: each denial must include individualized, specific reasons that directly relate to the applicant's actual financial behavior.

CFPB Circular 2023-03 Summary:

- Name: Adverse action notification requirements and the proper use of the CFPB's sample forms;

- Key Requirements: Cannot use generic reasons; must provide specific behavioral details; sample forms insufficient if they don't reflect actual reasons (source)
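The following minimal sketch illustrates the difference between a bucket and a specific reason. It derives the illustrative cash-advance statement quoted above directly from an applicant's own transactions. Several elements are hypothetical assumptions taken from the example wording rather than values prescribed by the CFPB: the `Transaction` type, the 30% threshold, the 60-day window, and the choice to scale monthly income to the window.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Transaction:
    """Hypothetical minimal transaction record."""
    posted: date
    kind: str      # e.g. "cash_advance" or "purchase"
    amount: float  # positive amount in account currency


def cash_advance_reason(txns, monthly_income, as_of, window_days=60, threshold=0.30):
    """Return a specific adverse action statement, or None if the factor does not apply.

    The statement cites the applicant's own figures instead of a broad
    bucket such as "purchasing history".
    """
    start = as_of - timedelta(days=window_days)
    advances = [t for t in txns
                if t.kind == "cash_advance" and start <= t.posted <= as_of]
    total = sum(t.amount for t in advances)
    # Assumption: scale monthly income to the length of the look-back window.
    window_income = monthly_income * window_days / 30
    if len(advances) < 2 or window_income <= 0 or total / window_income <= threshold:
        return None
    share = total / window_income
    return (f"{len(advances)} cash advances totaling ${total:,.0f} in the past "
            f"{window_days} days, equal to {share:.0%} of income over that period")


# Usage with hypothetical data.
txns = [
    Transaction(date(2025, 3, 1), "cash_advance", 1600.0),
    Transaction(date(2025, 3, 20), "cash_advance", 1600.0),
    Transaction(date(2025, 3, 5), "purchase", 900.0),
]
print(cash_advance_reason(txns, monthly_income=4000.0, as_of=date(2025, 3, 31)))
```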

Technical Compliance in the AI Age

Meeting regulatory requirements in the era of machine learning requires sophisticated technical solutions globally. Regulators worldwide expect lenders to make "black-box" models transparent, which necessitates advanced interpretability techniques:

Use explainable AI techniques such as LIME or SHAP to break down individual lending decisions into understandable components, quantifying how much each factor contributed to the final decision.

Technical Requirements Summary:

- Requirement: Explainable AI for credit decisions

- Methods: LIME, SHAP, or equivalent interpretability techniques

- Documentation: Real-time recording of decision factors and weights

- Purpose: Enable specific adverse action notices as required by ECOA
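Take a linear scorecard such as logistic regression and assume independent features. In that case, SHAP values computed in log-odds space reduce to each coefficient times the applicant's deviation from the baseline mean: phi_i = w_i * (x_i - E[x_i]). The sketch below uses that property to rank the factors that lowered an applicant's score and map them to reason text. The features, coefficients, means, and wording are hypothetical. Nonlinear models such as gradient-boosted trees would need a library like SHAP or LIME to estimate contributions. The default cap of four reasons mirrors the Regulation B commentary's observation that disclosing more than four reasons is unlikely to help the applicant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    weight: float  # coefficient in log-odds space; positive pushes toward approval
    mean: float    # baseline value from the development sample
    reason: str    # applicant-facing text used when this factor lowers the score


# Hypothetical scorecard: higher log-odds means more likely to be approved.
FEATURES = [
    Feature("utilization_pct", -0.04, 35.0, "Credit card balances are high relative to limits"),
    Feature("months_since_delinquency", 0.02, 40.0, "Recent delinquency on an account"),
    Feature("cash_advances_60d", -0.50, 0.5, "Frequent cash advances in the past 60 days"),
    Feature("income_thousands", 0.01, 60.0, "Income insufficient for amount of credit requested"),
]


def contributions(applicant: dict) -> dict:
    """Exact SHAP values for a linear model with independent features:
    phi_i = w_i * (x_i - E[x_i])."""
    return {f.name: f.weight * (applicant[f.name] - f.mean) for f in FEATURES}


def adverse_reasons(applicant: dict, max_reasons: int = 4) -> list:
    """Reason texts for the factors that lowered the score most, worst first."""
    phi = contributions(applicant)
    reason_for = {f.name: f.reason for f in FEATURES}
    # Keep only factors that pushed the score down, most negative first.
    negative = sorted((value, name) for name, value in phi.items() if value < 0)
    return [reason_for[name] for _, name in negative[:max_reasons]]


applicant = {"utilization_pct": 90.0, "months_since_delinquency": 6.0,
             "cash_advances_60d": 3.0, "income_thousands": 45.0}
print(adverse_reasons(applicant, max_reasons=2))
```

Logging the `contributions` dictionary alongside each decision is one way to keep the real-time record of decision factors and weights listed above.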

The Ongoing Obligation: Fair Lending Testing

Regardless of political changes or jurisdictional differences, fair lending testing requirements remain in force globally. "Robust fair lending testing regimes" must include:

- Regular disparate impact analysis

- Searches for less discriminatory alternatives (LDAs)

- Both manual and automated testing techniques

- Complete documentation of methodologies and results
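Regular disparate impact analysis often starts with a screening metric such as the adverse impact ratio (AIR). The AIR is a protected group's approval rate divided by the comparison group's approval rate. The sketch below computes it from decision counts and flags ratios below 0.80, the "four-fifths" rule of thumb borrowed from US employment-selection guidance. The group labels and counts are hypothetical. The 0.80 cut-off is an assumption, not an ECOA safe harbor, and production testing would typically add significance tests and controls for legitimate credit factors.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    label: str
    applicants: int
    approvals: int

    @property
    def approval_rate(self) -> float:
        return self.approvals / self.applicants if self.applicants else 0.0


def adverse_impact_ratio(protected: GroupOutcome, control: GroupOutcome) -> float:
    """Protected-group approval rate divided by control-group approval rate."""
    if control.approval_rate == 0:
        raise ValueError("control group has no approvals; the ratio is undefined")
    return protected.approval_rate / control.approval_rate


def screen(protected: GroupOutcome, control: GroupOutcome, threshold: float = 0.80) -> dict:
    """Flag groups whose AIR falls below the (assumed) four-fifths threshold."""
    air = adverse_impact_ratio(protected, control)
    return {"group": protected.label, "air": round(air, 3), "flag": air < threshold}


control = GroupOutcome("control", applicants=1000, approvals=600)
for group in (GroupOutcome("group_a", 500, 210), GroupOutcome("group_b", 500, 270)):
    print(screen(group, control))
```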

Fair Lending Testing Requirements:

- Frequency: Ongoing/regular basis;

- Scope: All credit decision models including AI;

- Methods: Disparate impact analysis; LDA searches; statistical testing;

- Documentation: Complete audit trail of testing and results;

- Legal Basis: ECOA and Fair Housing Act
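An LDA search can be framed as a constrained comparison. Among candidate models whose predictive performance stays within a tolerance of the baseline, prefer the one whose outcomes are closest to parity. The sketch below assumes each candidate has already been scored on the same holdout data, yielding an AUC and an adverse impact ratio. The model names, metrics, and 0.01 AUC tolerance are hypothetical. How much performance loss is acceptable is a business and legal judgment rather than a fixed constant.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    auc: float  # predictive performance on a shared holdout set
    air: float  # adverse impact ratio on the same holdout set (1.0 = parity)


def disparity(c: Candidate) -> float:
    """Distance from parity; smaller means less disparate outcomes."""
    return abs(1.0 - c.air)


def find_lda(baseline: Candidate, candidates: List[Candidate],
             auc_tolerance: float = 0.01) -> Optional[Candidate]:
    """Return the least disparate candidate that beats the baseline on disparity
    and whose AUC is within `auc_tolerance` of it, or None if there is none."""
    viable = [c for c in candidates
              if c.auc >= baseline.auc - auc_tolerance and disparity(c) < disparity(baseline)]
    if not viable:
        return None
    # Closest to parity first; among ties, prefer higher AUC.
    return min(viable, key=lambda c: (disparity(c), -c.auc))


baseline = Candidate("baseline", auc=0.780, air=0.78)
candidates = [
    Candidate("drop_feature_x", auc=0.776, air=0.86),
    Candidate("reweighted", auc=0.772, air=0.93),
    Candidate("simple_logit", auc=0.741, air=0.97),
]
print(find_lda(baseline, candidates))
```

Recording every candidate evaluated, not just the winner, is what turns this comparison into the documented search the testing requirements call for.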

Federal Risk Management Standards

Financial institutions using AI in lending must navigate complex regulatory webs beyond consumer protection requirements. These standards, primarily centered on the landmark SR 11-7 guidance, are designed to keep AI implementation safe and sound across US banking operations, and supervisors in other jurisdictions increasingly apply similar principles.

The Foundation: SR 11-7 Model Risk Management (Supervisory Guidance on Model Risk Management)

In April 2011, the Federal Reserve and OCC jointly issued what would become the cornerstone of AI governance in banking: Supervisory Guidance on Model Risk Management, known as SR 11-7. This guidance, later adopted by the FDIC in 2017, defines standards that are now being replicated by banking regulators worldwide.

The guidance establishes that model risk increases with complexity, uncertainty, breadth of use, and potential impact—all characteristics inherent in modern AI systems. At its core, SR 11-7 requires three fundamental safeguards that are becoming global standards: independent validation by objective parties, ongoing monitoring comparing outputs to actual outcomes, and documentation detailed enough that unfamiliar parties can understand the model's operation.
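The requirement to compare outputs with actual outcomes is often implemented as a backtest. Booked loans are sorted by predicted probability of default (PD) and split into bands. Each band's average prediction is then compared with its observed default rate. The minimal sketch below assumes predictions and realized outcomes are available as parallel lists. The five bands and the 0.02 tolerance are hypothetical settings, not SR 11-7 requirements.

```python
def backtest_by_band(predicted, defaulted, n_bands=5, tolerance=0.02):
    """Compare mean predicted PD with the observed default rate in each band.

    predicted: predicted default probabilities (0 to 1)
    defaulted: realized outcomes, 1 = defaulted, 0 = repaid
    Returns one dict per band, ordered from lowest to highest predicted risk.
    """
    if len(predicted) != len(defaulted):
        raise ValueError("predicted and defaulted must have the same length")
    if len(predicted) < n_bands:
        raise ValueError("need at least one loan per band")
    pairs = sorted(zip(predicted, defaulted))
    size = len(pairs) // n_bands
    report = []
    for b in range(n_bands):
        # The last band absorbs any remainder so every loan is counted exactly once.
        end = len(pairs) if b == n_bands - 1 else (b + 1) * size
        chunk = pairs[b * size:end]
        mean_pd = sum(p for p, _ in chunk) / len(chunk)
        observed = sum(d for _, d in chunk) / len(chunk)
        report.append({
            "band": b + 1,
            "mean_pd": mean_pd,
            "observed": observed,
            "breach": abs(observed - mean_pd) > tolerance,
        })
    return report
```

A breach in one band is a prompt for investigation, not proof of model failure; small bands can drift by chance.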

Understanding SR 11-7 Documentation Requirements in Practice

These requirements are becoming the global gold standard for AI governance. Your AI models need documentation that enables independent validation and ongoing risk management across three core areas:

- Model Development Documentation must cover business purpose, technical architecture, data sources, and performance metrics.

- Validation Reports require replication testing, benchmarking analysis, and sensitivity testing to prove the model works as intended.

- Ongoing Monitoring tracks performance trends, population stability, and business impact metrics like loss rates and fairness indicators.

Key insight from our global client implementations: treat documentation as a living risk management tool, not compliance paperwork. If your documentation can't help someone else understand and validate your model, it fails modern regulatory standards worldwide.
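Population stability, mentioned among the monitoring metrics above, is commonly tracked with the population stability index (PSI). The PSI compares each score band's share of current applicants with its share in the development sample: PSI = sum over bands of (actual_i - expected_i) * ln(actual_i / expected_i). In the sketch below, the band shares are hypothetical. The 0.10 and 0.25 cut-offs are widely used industry rules of thumb rather than regulatory thresholds.

```python
import math


def psi(expected_shares, actual_shares, floor=1e-6):
    """Population stability index between two binned distributions.

    Both arguments are per-band proportions in the same band order, each summing to 1.
    A small floor avoids log(0) when a band is empty.
    """
    if len(expected_shares) != len(actual_shares):
        raise ValueError("both distributions must use the same bands")
    total = 0.0
    for e, a in zip(expected_shares, actual_shares):
        e, a = max(e, floor), max(a, floor)
        total += (a - e) * math.log(a / e)
    return total


def stability_status(value):
    """Map a PSI value to a conventional, non-regulatory label."""
    if value < 0.10:
        return "stable"
    if value < 0.25:
        return "monitor"
    return "investigate"


development = [0.20, 0.20, 0.20, 0.20, 0.20]  # hypothetical score-band shares at build time
current = [0.10, 0.15, 0.20, 0.25, 0.30]      # hypothetical shares this quarter
value = psi(development, current)
print(round(value, 3), stability_status(value))
```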

Third-Party AI: Interagency Guidance on Third-Party Relationships

The explosion of third-party AI vendors prompted regulators globally to issue comprehensive oversight requirements. The US Interagency Guidance on Third-Party Relationships: Risk Management from June 2023 recognizes that whether developed internally or purchased from vendors, AI models pose similar risks requiring consistent oversight.

According to emerging global guidance, contracts with AI vendors must include provisions for:

- Audit rights

- Measurable performance standards

- Data security requirements

- Liability clauses

- Termination rights

Critically, regulators emphasize that institutions cannot outsource accountability. If a vendor's model is too opaque to validate, the institution must demand transparency or use alternatives.

SOC Reports: The Gold Standard for AI Vendor Assurance

SOC (Service Organization Control) reports have become the global practical standard for demonstrating vendor compliance. Financial institutions worldwide increasingly require these independent assessments before trusting their lending decisions to third-party AI.

SOC 2 Type II (baseline for AI vendors):

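- Evaluates controls against the five Trust Services Criteria categories: Security (required in every SOC 2 report), Availability, Processing Integrity, Confidentiality, Privacy

- Must be current (within 6 months)

- Should be supplemented with AI-specific controls (bias testing, versioning, explainability), which the standard criteria do not specifically address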

A clean SOC report doesn't guarantee a good AI model, but a qualified or adverse one is a reliable sign of operational problems.

The State Regulatory Patchwork

While federal rules set the baseline in the US, individual states are pushing ahead with their own AI-related laws, often stricter and more targeted, and comparable regional initiatives are emerging in other jurisdictions.

AI-Specific Algorithmic Accountability Laws

- NYC Local Law 144: Requires annual bias audits for automated employment decision tools. Similar auditing requirements are emerging in EU member states.

- Colorado SB21-169: Forces insurers to test AI for discriminatory outcomes and document system behavior. This framework is being replicated for lending in other jurisdictions.

Data Privacy and Consumer Rights

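- California Privacy Rights Act (CPRA): Directs regulators to create consumer access and opt-out rights for automated decision-making technology. The GDPR (Article 22) already gives individuals the right not to be subject to solely automated decisions with legal or similarly significant effects, and similar rights are spreading globally.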

- Illinois BIPA: Strictly limits biometric data use. Similar restrictions exist across the EU and emerging in Asia-Pacific.

Fair Lending and Anti-Discrimination Laws

Multiple US states have expanded anti-discrimination protections beyond federal law—a trend being replicated globally:

- California Unruh Civil Rights Act

- New York Fair Lending Law

- Massachusetts expanded CRA requirements

Global Standards Shaping Worldwide Compliance

NIST AI Risk Management Framework (2023)

Still widely used despite political changes and adopted by institutions globally. Built around four practical functions:

- Govern — Integrate AI oversight into company culture and accountability.

- Map — Know what AI systems you use and how they affect customers.

- Measure — Continuously track fairness, accuracy, and real-world impact.

- Manage — Act on what you find; prioritize risks, fix problems, communicate clearly.

Its strength is flexibility—it aligns with existing risk management systems globally and doesn't require a complete overhaul.

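EU AI Act (In Force Since Aug 2024)

- Classifies AI systems used to evaluate the creditworthiness or establish the credit score of natural persons as "high-risk" (Annex III).

- Requires strong risk controls, explainability, and human oversight, with most high-risk obligations applying from August 2026.

- Global banks with EU operations are already preparing to comply, creating pressure for worldwide adoption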

ISO/IEC Standards

Gaining traction in regulatory discussions worldwide:

- 23053 — Framework for developing ML-based AI systems.

- 23894 — End-to-end risk management for AI.

- 38507 — Governance guidance for executives and boards.

Basel Committee Guidance

- Operational Resilience Principles (BCBS 516): Apply to banks' critical operations and the technology they depend on, which increasingly includes AI. Emphasizes stress testing, governance, and risk resilience.

- AI/ML Newsletter (Dec 2022): Sets expectations for explainability, model governance, and global regulatory cooperation.

04. Critical Implementation Pitfalls and CFPB Enforcement

The Apple Card Case Study: A Global Cautionary Tale

In November 2019, a Twitter thread shook the financial technology world and ultimately reshaped how the industry thinks about algorithmic transparency in lending. Danish entrepreneur David Heinemeier Hansson discovered that although he and his wife shared finances and she had the higher credit score, Apple Card's algorithm had granted him a credit limit 20 times higher than hers. His public outcry gained global momentum when Apple co-founder Steve Wozniak said he had experienced a similar issue.

The controversy escalated rapidly, demonstrating how AI lending issues can become global reputation crises. Within days, the New York State Department of Financial Services launched an investigation into Goldman Sachs, Apple Card's banking partner. Superintendent Linda Lacewell made the department's position clear: "Whether the intent is there or not, disparate impact is illegal" and "There is no such thing as, 'the company didn't do it, the algorithm did.'"

The case exposed critical gaps in how financial institutions globally deploy AI systems. Although NYDFS ultimately concluded in its 2021 report that Goldman Sachs had not violated fair lending laws, the bank's credit decisions failed the public transparency test. The inability to provide clear explanations for credit decisions, even legally sound ones, created a perfect storm of public outrage, regulatory scrutiny, and reputational damage that financial institutions worldwide took note of.

The story didn't end with the bias investigation. In October 2024, the Consumer Financial Protection Bureau fined Apple $25 million and Goldman Sachs $45 million, and ordered Goldman to pay about $20 million in consumer redress, over separate Apple Card failures, including the mishandling of transaction disputes. The case shows how algorithmic transparency issues can be symptomatic of broader operational challenges that regulators worldwide now scrutinize.

Key Global Lessons from the Apple Card Case:

- Technical Compliance Alone Is Insufficient Worldwide: Meeting legal requirements for fair lending doesn't guarantee public acceptance or regulatory approval globally. Transparency and explainability are equally critical.

- Customer Service Must Understand AI Decisions Everywhere: Front-line staff globally need to be equipped with clear, understandable explanations for AI-driven decisions. "The algorithm decided" is never an acceptable answer.

- Perception Can Trigger Global Regulatory Action: Even when algorithms are unbiased, the appearance of discrimination can lead to costly investigations and lasting reputational damage across multiple jurisdictions.

- Explainability Is Non-Negotiable Globally: Financial institutions worldwide must be able to clearly articulate how their AI systems make decisions, especially when those decisions significantly impact customers' financial lives.

Global Lessons: International AI Enforcement Cases

While the Apple Card case dominated headlines in the US, similar algorithmic failures have emerged worldwide, demonstrating that AI transparency and fairness issues transcend borders.

Australia's Robodebt: The $1.8 Billion Automated Disaster (2016-2020)

Australia's Robodebt scheme stands as one of the most catastrophic examples of algorithmic decision-making failure globally. Implemented by the Australian government in 2016, this automated debt recovery system was designed to identify welfare overpayments by cross-referencing annual tax data with fortnightly benefit payments (Royal Commission into the Robodebt Scheme, Final Report, July 2023).

The algorithmic flaw was fundamental: the system used "income averaging"—dividing annual income equally across fortnightly periods—to determine if welfare recipients had been overpaid. This completely ignored the reality of casual and part-time work where income varies significantly between pay periods (University of Melbourne, "The flawed algorithm at the heart of Robodebt," July 2024).
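The averaging flaw is easy to reproduce. The sketch below models a casual worker who earns in half the year and claims benefits in the other half. It compares the debt raised using actual fortnightly earnings with the debt raised when annual income is spread evenly across 26 fortnights. The payment rules are hypothetical simplifications, not Centrelink's actual rules: a $600 maximum fortnightly payment, a $300 income-free area, and a 50-cent reduction per dollar earned above it.

```python
MAX_RATE = 600.0     # hypothetical maximum fortnightly payment
INCOME_FREE = 300.0  # hypothetical income-free area per fortnight
TAPER = 0.5          # hypothetical reduction per dollar earned above the free area


def entitlement(fortnight_income: float) -> float:
    """Simplified payment due for one fortnight, given income earned in that fortnight."""
    reduction = TAPER * max(0.0, fortnight_income - INCOME_FREE)
    return max(0.0, MAX_RATE - reduction)


def alleged_debt(earnings, paid):
    """Overpayment computed two ways for the same person.

    earnings: actual income in each fortnight
    paid: benefit actually paid in each fortnight
    Returns (debt_using_actual_income, debt_using_averaged_income).
    """
    if len(earnings) != len(paid):
        raise ValueError("earnings and paid must cover the same fortnights")
    average = sum(earnings) / len(earnings)  # the income-averaging step
    actual_debt = sum(max(0.0, p - entitlement(e)) for e, p in zip(earnings, paid))
    averaged_debt = sum(max(0.0, p - entitlement(average)) for p in paid)
    return actual_debt, averaged_debt


# Casual worker: earns $1,200 per fortnight for half the year, nothing for the other half,
# and receives the full payment only in the fortnights with no earnings.
earnings = [1200.0] * 13 + [0.0] * 13
paid = [0.0] * 13 + [600.0] * 13
print(alleged_debt(earnings, paid))
```

With these inputs, the person owes nothing on their actual earnings. Averaging, however, attributes $600 of income to every fortnight in which they earned nothing and raises a $1,950 debt.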

The human cost was devastating. The system wrongly accused approximately 400,000 Australians of owing money to the government, with many receiving debt notices for thousands of dollars they didn't actually owe. Most tragically, the Royal Commission heard testimony linking multiple suicides to the stress and stigma of receiving these automated debt notices (Royal Commission Final Report, Chapter 15). The system also issued debt notices to deceased people and targeted the most vulnerable members of society.

The financial toll was staggering: In 2020, the government was forced to settle a class-action lawsuit for A$1.8 billion and repay A$746 million in wrongfully collected debts (Gordon Legal, Robodebt Class Action Settlement, 2020). The Royal Commission's final report in 2023 condemned the scheme as "a massive failure of public administration" and referred several officials for potential criminal charges (Royal Commission Final Report, Executive Summary).

Key lessons for global lenders:

- Algorithmic simplicity doesn't guarantee safety - even basic automated systems can cause massive harm when poorly designed

- The burden of proof matters - Robodebt reversed the normal burden of proof, requiring citizens to prove they didn't owe money rather than the government proving they did

- Vulnerable populations suffer most - automated systems often disproportionately impact those least able to defend themselves

- "Automation without human oversight" is a recipe for disaster - the system removed human review from what should have been complex individual assessments

Netherlands SyRI: AI Discrimination Ruled Illegal (2014-2020)

In February 2020, a Dutch court delivered a landmark ruling that stopped the government's SyRI (System Risk Indication) algorithm, marking one of the first times globally that a court banned an AI system on human rights grounds (District Court of The Hague, NJCM et al. v The State of the Netherlands, February 5, 2020).

SyRI was designed to detect welfare and tax fraud by combining data from multiple government databases and using algorithms to identify citizens with "increased risk" of committing fraud. The system analyzed everything from income and employment records to energy consumption and housing data (AlgorithmWatch, "How Dutch activists got an invasive fraud detection algorithm banned," 2020).

The discrimination was systematic: SyRI was deployed exclusively in low-income neighborhoods with high immigrant populations. As UN Special Rapporteur on extreme poverty Philip Alston noted in his submission to the court, "Whole neighborhoods are deemed suspect and are made subject to special scrutiny... while no such scrutiny is applied to those living in better off areas" (UN Special Rapporteur Letter to Dutch Court, September 26, 2019).

The court's ruling was groundbreaking: The Hague District Court found that SyRI violated Article 8 of the European Convention on Human Rights (right to private life) because (Court Judgment, paragraphs 6.81-6.95):

- The system lacked transparency - citizens had no way to know why they were flagged or challenge their risk scores

- The algorithm was completely opaque - even the court couldn't determine how the system actually worked

- The risk of discrimination was unacceptable - with no safeguards against bias or stereotyping effects

- The interference with privacy rights was disproportionate to the goal of fraud detection

Perhaps most damning: SyRI was spectacularly ineffective. Investigations by Dutch media revealed that in its years of operation, the system had failed to detect a single case of fraud despite millions invested in its development and deployment.

Global implications for AI lenders:

- Transparency is a legal requirement, not a nice-to-have - courts worldwide are demanding explainability in algorithmic systems

- Targeting specific populations creates legal liability - algorithms that disproportionately impact protected groups face human rights challenges

- Effectiveness matters for legal justification - systems that don't work cannot justify their intrusion on privacy rights

- The "black box" defense no longer works - courts expect institutions to explain how their algorithms make decisions

The Universal Pattern Emerges

These international cases reveal the same patterns seen in US enforcement actions:

- Promise-Performance Gaps: Robodebt promised efficient fraud detection but delivered massive errors. SyRI promised sophisticated risk assessment but caught no fraudsters.

- Vulnerable Population Impact: Both systems disproportionately harmed society's most vulnerable - welfare recipients, immigrants, and the poor.

- Transparency Failures: Neither system could adequately explain its decisions to affected individuals or even to courts and regulators.

- Accountability Gaps: In both cases, automated systems were deployed without sufficient human oversight or appeal mechanisms.

The message for global financial institutions is clear: algorithmic decision-making failures are not limited to any single country or regulatory framework. Courts worldwide are establishing that AI systems must be transparent, fair, and accountable - regardless of their technical sophistication.

05. Fraud and Security Challenges: Alarming Global Statistics

The financial services industry globally stands at a critical juncture where the same artificial intelligence technology that promises to revolutionize banking is being weaponized by sophisticated criminals worldwide. The numbers paint an alarming picture of this rapidly evolving global battleground.

- 70% of 600 surveyed fraud-management, AML, and compliance professionals globally believe that criminals are more advanced in leveraging AI than banks are in countering it, according to BioCatch's 2024 AI, Fraud, and Financial Crime Survey. Around half report a rise in financial crime and expect further increases in 2024. Generative AI enables more convincing scams globally, from deepfake videos to synthetic identities.

- 80% of 800 banking executives across 17 countries said criminals are more sophisticated in laundering money than institutions are at detecting it, according to the 2025 BioCatch Dark Economy Report. Moreover, 83% acknowledged a strong connection between fraud and broader crimes like drug and human trafficking.

- $40 billion in U.S. fraud losses could be driven by AI by 2027, up from $12.3 billion in 2023 (a 32% CAGR), according to Deloitte's 2024 Financial Services Outlook. Similar growth rates are observed globally, with European and Asian markets showing comparable increases.

- 2 million money mule accounts were identified across 257 FIs in 21 countries, BioCatch reported in January 2025. That is likely a small portion of the global total, given the scale of criminal operations across the world's 44,000 banks.

- 91% of institutions globally are now rethinking voice verification because of AI voice cloning, according to BioCatch's survey. 95% of synthetic identities go undetected by traditional fraud models.

- 84% of banking leaders worldwide agree the fight against the "Dark Economy" is critical, and most are increasing investments in countermeasures. 89% say more regulatory coordination is essential globally.

Learning from Enforcement: The Upstart Precedent

While the Apple Card case dominated global headlines, the regulatory playbook for AI lending was actually written years earlier through a quieter but more instructive case that's now influencing global regulatory approaches: Upstart Network's pioneering no-action letter.

In 2017, the CFPB issued its first no-action letter to Upstart Network, marking a watershed moment for AI in lending globally. The letter wasn't just permission to use alternative data—it was a detailed blueprint for how regulators worldwide now expect AI models to be governed.

The company committed to operating under unprecedented transparency requirements that have become the global gold standard for the industry. They documented their underwriting model with surgical precision, creating audit trails that could withstand regulatory scrutiny. Most significantly, Upstart agreed to continuous monitoring of how alternative data influenced credit decisions—effectively creating the first real-world laboratory for fair AI lending.

The transparency measures Upstart pioneered became particularly influential globally. The company developed clear explanations for how alternative data—from education history to employment patterns—impacted lending decisions. This wasn't just regulatory theater; it was practical customer service that helped borrowers understand and improve their creditworthiness.

Sharp Global Shift

But the story took a sharp turn in 2022 that sent shockwaves through the global fintech community. Upstart wanted to make significant changes to its model without waiting for CFPB review, so at the company's request the Bureau terminated the no-action letter, ending Upstart's special regulatory status. The message was unmistakable: even companies with explicit regulatory approval couldn't operate AI systems without continuous oversight and approval for material changes.

The Upstart precedent reveals a fundamental truth about global AI regulation: special treatment comes with special obligations. For today's global lenders, this means building compliance architecture that can support not just current models, but future iterations and regulatory requirements across multiple jurisdictions.

The lasting global lesson is strategic: AI lending success requires regulatory partnership, not just technical compliance. Institutions that build transparent, well-documented systems from the start gain competitive advantages through faster approvals and deeper regulatory trust worldwide.

Recent CFPB Actions: The Patterns Emerge

While most enforcement actions don't explicitly target AI, several cases reveal how algorithmic systems amplify traditional compliance failures—creating new risks that global AI lenders must actively avoid.

Hello Digit (2022): When Algorithms Break Promises — $2.7M Fine

Hello Digit's case offers the clearest example of algorithmic enforcement in action. The fintech's automated savings algorithm was designed to help users save money without causing overdrafts—yet the CFPB found it repeatedly caused the exact problem it promised to prevent. Since 2017, the company had received nearly 70,000 overdraft reimbursement requests, demonstrating systematic algorithmic failure at scale.

The $2.7 million penalty wasn't just for the overdrafts—it was for the fundamental disconnect between marketing promises and algorithmic reality. Hello Digit advertised a "no overdraft guarantee" while deploying technology that couldn't deliver on that promise. The CFPB's message was unambiguous: if your algorithm can't fulfill your marketing claims, both the algorithm and the marketing are problematic.

Regions Bank (2022): When Systems Create Confusion — $191M Total

While not explicitly an AI case, the $191 million Regions Bank was ordered to pay ($141 million in customer refunds plus a $50 million civil penalty) illustrates how complex automated systems can create compliance nightmares. The bank's transaction processing systems created "complex and counter-intuitive practices" for overdraft fees that were so confusing even bank staff couldn't explain them to customers.

For AI lenders, the parallel is critical: if your staff can't explain how your algorithms work, you're facing the same risk Regions encountered. The CFPB expects institutions to understand and articulate their automated processes, regardless of underlying complexity.

The Global Enforcement Pattern Emerges

These US cases reveal three consistent failure modes that AI systems amplify—patterns now being recognized by regulators worldwide:

- Algorithmic Harm: When automated systems systematically damage consumers, regulators respond aggressively. AI lenders must ensure their models don't create unintended negative outcomes at scale.

- Explanation Failures: When institutions can't explain their automated processes, regulators view this as a fundamental governance breakdown. AI systems must be accompanied by clear explanation capabilities.

- Promise-Performance Gaps: When marketing claims exceed algorithmic capabilities, regulators treat this as a deceptive practice. AI lenders must ensure their technology can deliver on every customer promise in every jurisdiction where they operate.

The lesson for global AI lenders is operational: automation doesn't reduce compliance obligations—it increases the speed and scale at which violations can occur across multiple jurisdictions simultaneously.

06. AI Lending Implementation: From Technical Pitfalls to Strategic Success

Successful AI lending requires mastering both technical excellence and regulatory compliance. This section distills our experience helping institutions navigate the complex terrain from initial implementation through long-term strategic success.

Technical Implementation Pitfalls

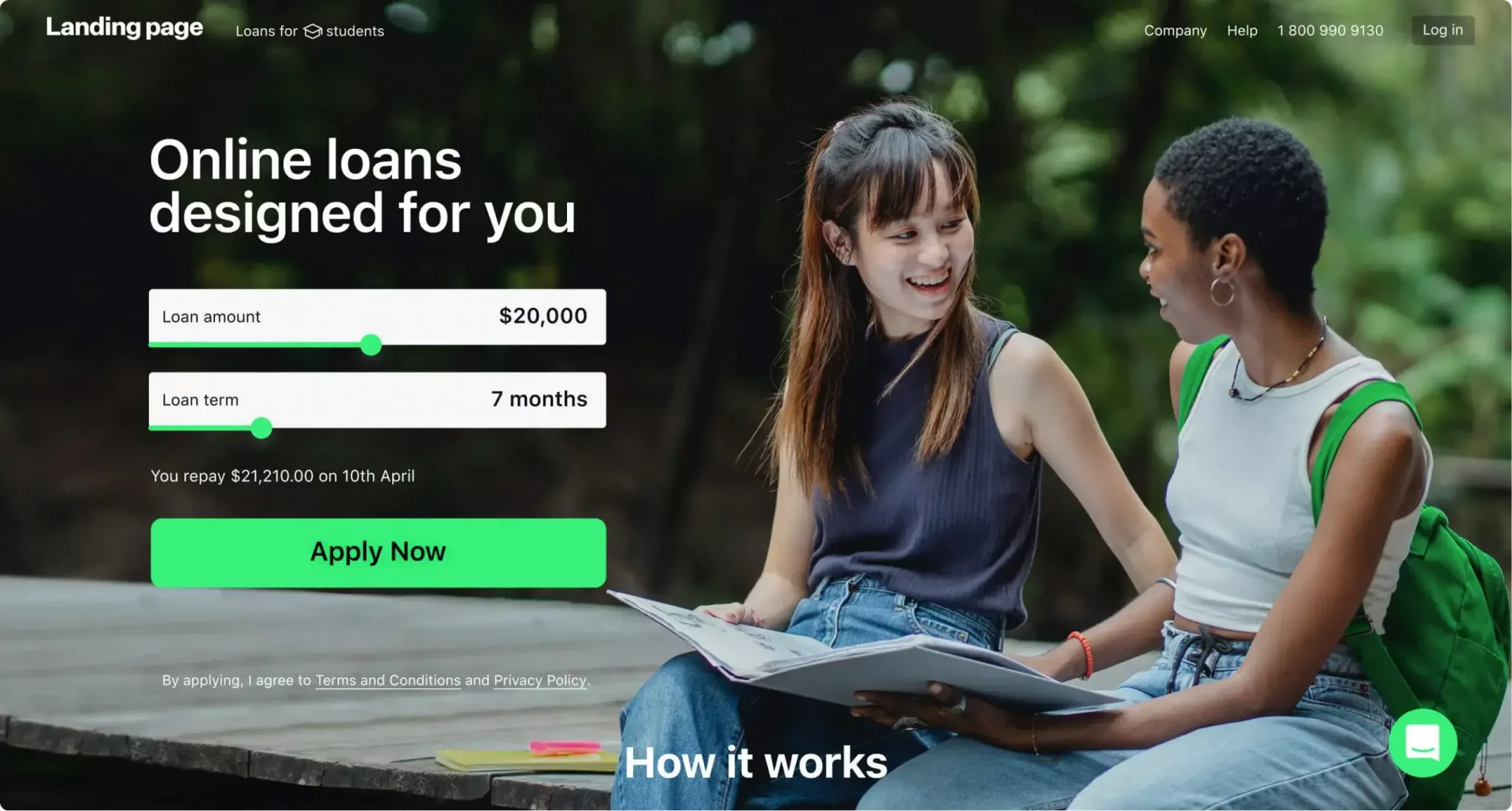

Alternative Data: Expanding Access, Increasing Risk

85% of banks globally use AI to automate lending processes, with many leveraging alternative data to reach underserved populations. Cash flow, rent payments, and utility history promise expanded credit access for thin-file borrowers, but create new compliance challenges.

What's generally acceptable: Income verification through bank account data, rental history, and utility bills align with fair lending principles and have received cautious support from regulators worldwide. These sources demonstrate financial stability through documented payment patterns.

High-risk signals: Social media activity, behavioral patterns like typing speed or device type, and educational prestige often serve as proxies for protected characteristics and can trigger disparate impact or redlining allegations.

To stay compliant:

- Focus on income stability, not spending habits.

- Avoid sensitive or inferred attributes (e.g. religion, politics, education costs).

- Justify every alternative data input with a documented business necessity and pre-launch bias testing.

- Ensure transparency—be ready to explain how the data influenced a decision.
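One way to put pre-launch bias testing into practice is a proxy screen. Before an alternative data input is approved, compare its distribution across groups in a fair lending test dataset and flag inputs that differ sharply. The sketch below is a minimal illustration, not a complete proxy analysis. It assumes group labels are available for testing purposes only and are never used as model inputs. The function names, the 0.5 threshold, and the sample values are hypothetical. It uses a standardized mean difference (Cohen's d with a simple pooled standard deviation), which only catches differences in averages, so a clean result does not prove an input is safe.

```python
import math
from dataclasses import dataclass
from statistics import mean, pvariance


@dataclass
class ProxyScreenResult:
    feature: str
    smd: float      # standardized mean difference, group A minus group B
    flagged: bool   # True if |smd| meets or exceeds the review threshold


def standardized_mean_difference(group_a, group_b):
    """Cohen's d using a simple pooled SD (mean of the two population variances)."""
    diff = mean(group_a) - mean(group_b)
    pooled_sd = math.sqrt((pvariance(group_a) + pvariance(group_b)) / 2)
    if pooled_sd == 0:
        # Both groups are constant: any difference in level is perfect separation.
        return 0.0 if diff == 0 else math.copysign(math.inf, diff)
    return diff / pooled_sd


def screen_feature(name, group_a, group_b, threshold=0.5):
    """Flag a candidate input whose group averages differ sharply.

    threshold=0.5 is a hypothetical review trigger, not a regulatory value.
    """
    smd = standardized_mean_difference(group_a, group_b)
    return ProxyScreenResult(name, smd, abs(smd) >= threshold)


if __name__ == "__main__":
    # Hypothetical test-only samples for two groups.
    print(screen_feature("typing_speed_wpm", [40, 42, 38, 41], [55, 58, 53, 56]))
    print(screen_feature("on_time_rent_rate", [0.95, 0.90, 0.97, 0.92],
                         [0.94, 0.91, 0.96, 0.93]))
```

A flagged input isn't automatically prohibited. It triggers the documented business-necessity review. A fuller program would also add multivariate tests, because combinations of innocuous-looking inputs can act as proxies even when each passes on its own.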

Governance: Making AI Accountable

The difference between responsible and risky AI often comes down to governance, not just code. A strong global AI risk framework should follow the three lines of defense model:

- Model Owners & Business Users — build and operate models, monitor performance, and log exceptions.

- Risk & Compliance Teams — independently validate models, test for bias, ensure policy alignment.

- Internal Audit — assess the entire framework, including vendor oversight and regulatory compliance.

Best practice: establish a Model Risk Committee with clear authority over approvals, performance reviews, exception handling, and sunset decisions. Without centralized oversight, governance gaps become compliance vulnerabilities.
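Some teams make the committee's authority enforceable rather than advisory by encoding the approval workflow in their model inventory. Under that approach, a model cannot score production applications until it has passed independent validation and committee sign-off. The sketch below is a hypothetical illustration of that idea. `ModelRecord`, `Status`, and the method names are invented for this example and do not correspond to any particular product. The sketch maps the first line (model owner) and second line (independent validator) onto code. It also sends a model back to development after a material change, echoing the expectation that material changes need fresh review.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Status(Enum):
    DEVELOPMENT = "development"
    VALIDATED = "validated"
    APPROVED = "approved"
    RESTRICTED = "restricted"
    RETIRED = "retired"


@dataclass
class ModelRecord:
    model_id: str
    version: str
    owner: str                           # first line: builds and operates the model
    status: Status = Status.DEVELOPMENT
    validated_by: Optional[str] = None   # second line: independent validator
    decision_log: List[str] = field(default_factory=list)  # committee decisions

    def record_validation(self, validator: str) -> None:
        if validator == self.owner:
            raise PermissionError("validation must be independent of the model owner")
        if self.status is not Status.DEVELOPMENT:
            raise RuntimeError(f"cannot validate a model in status {self.status.value}")
        self.validated_by = validator
        self.status = Status.VALIDATED

    def committee_approve(self, decision_ref: str) -> None:
        if self.status is not Status.VALIDATED:
            raise RuntimeError("committee approval requires independent validation first")
        self.status = Status.APPROVED
        self.decision_log.append(f"approved v{self.version}: {decision_ref}")

    def committee_restrict(self, decision_ref: str) -> None:
        if self.status is not Status.APPROVED:
            raise RuntimeError("only an approved model can be restricted")
        self.status = Status.RESTRICTED
        self.decision_log.append(f"restricted v{self.version}: {decision_ref}")

    def committee_retire(self, decision_ref: str) -> None:
        self.status = Status.RETIRED
        self.decision_log.append(f"retired v{self.version}: {decision_ref}")

    def material_change(self, new_version: str) -> None:
        """A material change sends the model back through validation and approval."""
        if self.status is Status.RETIRED:
            raise RuntimeError("retired models cannot be changed")
        self.version = new_version
        self.validated_by = None
        self.status = Status.DEVELOPMENT

    def can_score_production(self) -> bool:
        return self.status is Status.APPROVED
```

Internal audit, the third line, would review the inventory and its decision log rather than sit in the scoring path itself.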

Explainability: Transparency Is No Longer Optional

Lenders globally can no longer hide behind complexity. Regulators and consumers worldwide now expect clear explanations for AI-driven credit decisions.

Effective institutions combine:

- SHAP values or LIME explanations to show which features most influenced a decision.

- Counterfactuals to tell borrowers what could have changed the outcome ("If your credit utilization were 15% lower, the result would differ.")

- Consistent, auditable explanation logic across decisions.
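For a linear or logistic scorecard, both explanation styles can be computed exactly. Assuming independent features, the SHAP value of feature i in log-odds space is w_i · (x_i − mean_i). A single-feature counterfactual is the change in x_i that closes the gap to the approval cutoff. The sketch below assumes a hypothetical three-feature logistic model. The weights, baseline means, and cutoff are illustrative and not calibrated. Tree ensembles and neural networks would need an approximation method, such as the `shap` or `lime` packages, instead.

```python
import math

# Hypothetical logistic scorecard (illustrative weights, not calibrated).
WEIGHTS = {
    "credit_utilization": -3.0,   # share of revolving limit in use, 0..1
    "months_at_employer": 0.02,
    "on_time_rent_rate": 2.5,     # share of rent payments made on time, 0..1
}
INTERCEPT = 0.5
# Hypothetical population means used as the SHAP baseline.
BASELINE = {"credit_utilization": 0.35, "months_at_employer": 36, "on_time_rent_rate": 0.90}
APPROVAL_LOGIT = 0.0  # approve when log-odds >= 0, i.e. probability >= 0.5


def log_odds(applicant):
    return INTERCEPT + sum(w * applicant[f] for f, w in WEIGHTS.items())


def approval_probability(applicant):
    return 1.0 / (1.0 + math.exp(-log_odds(applicant)))


def contributions(applicant):
    """Exact SHAP values in log-odds space for a linear model with independent features."""
    return {f: w * (applicant[f] - BASELINE[f]) for f, w in WEIGHTS.items()}


def adverse_reasons(applicant, top_n=2):
    """Features that pushed this applicant's score down the most, largest effect first."""
    negative = [(c, f) for f, c in contributions(applicant).items() if c < 0]
    return [f for c, f in sorted(negative)[:top_n]]


def required_change(applicant, feature):
    """Single-feature counterfactual: change in `feature` that reaches the cutoff."""
    gap = APPROVAL_LOGIT - log_odds(applicant)
    if gap <= 0:
        return 0.0  # already at or above the cutoff
    weight = WEIGHTS[feature]
    if weight == 0:
        raise ValueError(f"{feature} cannot move the score")
    return gap / weight


if __name__ == "__main__":
    applicant = {"credit_utilization": 0.85, "months_at_employer": 12, "on_time_rent_rate": 0.60}
    print(round(approval_probability(applicant), 3))
    print(adverse_reasons(applicant))
    print(round(required_change(applicant, "credit_utilization"), 4))
```

Ranked negative contributions give applicant-specific reasons suitable for an adverse action notice. The counterfactual produces statements like the utilization example. A production version would also need to respect feasible ranges (utilization cannot fall below zero) and avoid suggesting changes to attributes the applicant cannot act on.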

Explainability isn't just regulatory hygiene—it's critical to building consumer trust, defending models under scrutiny, and preventing reputational fallout.

Common Compliance Failures and Prevention

AI systems in lending often fail not because of intent, but because of overlooked basics. Based on real enforcement actions, here are ten common pitfalls—and how to prevent them:

- Generic Adverse Action Notices — Using boilerplate reasons violates regulations worldwide. Generate model-specific, applicant-level explanations.

- Poor Model Documentation — If your team changes, can someone else understand the model? Ensure full documentation of logic, data, and assumptions.

- No Fair Lending Testing — "Race-blind" ≠ fair. Perform regular disparate impact analysis across all protected classes—monthly for high-risk models.

- Vendor Blind Trust — "They said it's compliant" doesn't cut it. Demand validation reports, audit rights, and full transparency before production use.

- Risky Alternative Data — Avoid inputs that could create discrimination across different cultural and regulatory contexts.

- No Ongoing Monitoring — Models drift. Implement dashboards to track performance, fairness, and data stability continuously—not just during testing.

- Uncontrolled Manual Overrides — Human overrides can undo model fairness. Track them, require justification, and review for bias patterns.

- Lack of Governance — AI needs ownership. Create a Model Risk Committee with authority to approve, restrict, or retire models.

- Inadequate Consumer Communication — Customer service must explain AI decisions in plain language. Train staff and provide escalation scripts.

- Missing Regulatory Tracking — Regulations evolve quickly. Set up monthly update reviews with legal or compliance to stay current.
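A common first screen for disparate impact analysis is the adverse impact ratio: each group's approval rate divided by the approval rate of a reference group. The sketch below is a minimal illustration. The 0.8 threshold is borrowed from the "four-fifths" rule of thumb in US employment-selection guidance and serves here only as an illustrative trigger for review, not as a legal standard for credit. Group labels, counts, and function names are hypothetical. A real fair lending program would add statistical significance tests and controls for legitimate credit factors.

```python
from collections import Counter


def approval_rates(decisions):
    """decisions: iterable of (group_label, approved) pairs."""
    totals, approvals = Counter(), Counter()
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def adverse_impact_ratios(decisions, reference_group):
    """Each group's approval rate divided by the reference group's rate."""
    rates = approval_rates(decisions)
    reference_rate = rates[reference_group]
    if reference_rate == 0:
        raise ValueError("reference group has no approvals; ratio is undefined")
    return {g: r / reference_rate for g, r in rates.items()}


def groups_for_review(decisions, reference_group, threshold=0.8):
    """Groups whose ratio falls below the illustrative four-fifths trigger."""
    ratios = adverse_impact_ratios(decisions, reference_group)
    return sorted(g for g, r in ratios.items() if g != reference_group and r < threshold)


if __name__ == "__main__":
    # Hypothetical monthly decisions: (group, approved)
    decisions = (
        [("A", True)] * 80 + [("A", False)] * 20
        + [("B", True)] * 55 + [("B", False)] * 45
        + [("C", True)] * 70 + [("C", False)] * 30
    )
    print(adverse_impact_ratios(decisions, "A"))
    print(groups_for_review(decisions, "A"))
```

The same calculation can be applied to manual override decisions, for example the share of each group's applications overridden to a decline, to surface override bias patterns.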

The Real Cost of Getting It Wrong

AI in lending offers speed, scale, and efficiency, but when compliance is overlooked, the consequences extend far beyond headline-grabbing fines. In 2024, Apple and Goldman Sachs were ordered to pay $89 million over Apple Card failures, including mishandled transaction disputes and misleading information about interest-free payment plans, yet the hidden costs of compliance failures often dwarf regulatory penalties.

Financial Impact Beyond Fines

Legal defense and internal investigations globally typically cost 2-3 times the actual regulatory fine. IT and data remediation—including complete model rebuilds and new audit systems—can require millions in additional investment. Lost revenue during product freeze periods or paused rollouts creates opportunity costs that compound over time.

Operational Burden: The Long Tail

Regulatory enforcement rarely ends with a payment. Most consent orders require extensive, ongoing operational changes including independent monitors for 2-5 years, pre-approval requirements for any AI model changes, ongoing regulatory reporting obligations, customer remediation programs, and expanded compliance staffing.

These obligations stretch already thin teams and divert resources from innovation to compliance firefighting, creating competitive disadvantages that persist long after headlines fade.

Strategic and Reputational Consequences

Compliance failures ripple outward into capital markets, partnerships, and talent acquisition. Observable impacts include stock price declines and investor skepticism, increased cost of capital for lenders perceived as high-risk, delays or loss of strategic partnerships with fintechs and institutional investors, and hiring challenges in critical data science and compliance roles.

In a trust-driven business like lending, reputation isn't just brand equity—it's the foundation of customer acquisition and retention. AI-related compliance issues often become lightning rods for public criticism, amplified through social media and resulting in class action lawsuits and Congressional scrutiny.

07. Future Outlook and Strategic Recommendations

AI in lending isn't just evolving—it's accelerating. The institutions that succeed will be those who treat compliance not as a checkbox, but as a core design principle that enables sustainable innovation.

Where Regulation Is Headed

Over the next 12-24 months, expect material changes in AI governance worldwide. In the US, agencies are developing AI-specific guidance focused on explainability, bias testing, and governance requirements. Simultaneously, the EU AI Act, UK FCA guidelines, and emerging frameworks in Asia-Pacific are creating additional compliance layers that are influencing global standards.

State-level initiatives in the US, including California's extensive AI legislation package and emerging laws in other states, add a further layer of requirements.

The EU AI Act and Basel Committee principles are influencing global standards beyond their jurisdictions. Even non-US lenders and institutions without European operations are beginning to align their systems to avoid future disruption as these standards become de facto global requirements.

Strategic Implementation Principles

- Make Explainability a Core Feature: Design models with transparency from inception rather than retrofitting explanation capabilities. Build internal tools for staff and external interfaces for customers that provide clear, understandable explanations for every decision.

- Design for Flexibility: Use modular, API-first architectures that enable rapid adaptation to regulatory changes. Cloud-native infrastructure provides the resilience and scalability necessary to handle evolving compliance requirements without fundamental system redesigns.

- Automate Compliance Where Possible: Manual compliance processes don't scale with AI-driven lending volumes. Build real-time bias monitoring, version-controlled model documentation, and automated audit trails directly into deployment pipelines.

- Embed Fairness and Inclusion: Regulators closely monitor AI's impact on underserved communities. Integrate fairness and inclusion metrics into model objectives—not just to pass audits, but to expand market reach responsibly and sustainably.

- Vet Partners Critically: Many compliance failures originate with "black box" third-party vendors. Whether evaluating credit decisioning engines or data sources, require explainability, comprehensive validation reports, and audit rights. Vendors who won't explain their systems create unacceptable compliance risks.

- Build Strategic Relationships: Collaborate proactively with regulators, academic researchers, and industry peers. Surround your organization with legal, compliance, and ethical AI expertise alongside engineering talent to ensure comprehensive risk management.
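Alongside real-time bias monitoring, models need a data-stability check. One widely used in credit risk is the Population Stability Index (PSI). It compares the binned distribution of a feature or score in production against its distribution at validation: PSI = Σ (a_i − e_i) · ln(a_i / e_i), where a_i and e_i are the shares of current and baseline observations in bin i. The sketch below is a minimal version under stated assumptions. Bins come from baseline quantiles, and empty bins are floored at a small epsilon so the logarithm stays defined. The 0.1 and 0.25 cut-offs are common industry rules of thumb rather than regulatory thresholds. Function names and status labels are hypothetical.

```python
import math
from bisect import bisect_right


def quantile_edges(baseline, n_bins=10):
    """Interior bin edges taken from the baseline's empirical quantiles."""
    ordered = sorted(baseline)
    return [ordered[len(ordered) * k // n_bins] for k in range(1, n_bins)]


def bin_shares(values, edges, eps=1e-6):
    """Share of values in each bin, floored at eps so the log below is defined."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline, current, n_bins=10):
    edges = quantile_edges(baseline, n_bins)
    expected = bin_shares(baseline, edges)
    actual = bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def stability_status(psi):
    # Common industry rules of thumb, not regulatory thresholds.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "monitor"
    return "investigate"


if __name__ == "__main__":
    baseline = list(range(1000))             # hypothetical validation-time scores
    current = [v + 300 for v in baseline]    # hypothetical shifted production scores
    psi = population_stability_index(baseline, current)
    print(round(psi, 3), stability_status(psi))
```

Running this per feature and per score on a schedule, and writing each result to the audit trail, is one way to turn "models drift" into an alert rather than a discovery during an examination.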

Why Compliance Drives Competitive Advantage

Strong AI compliance enables trust through clear, fair decision-making that reduces customer churn and builds loyalty. It provides market access by facilitating expansion across geographies and verticals without regulatory barriers. Clean documentation and validation workflows enable faster iteration cycles for model improvements and updates.

Most importantly, ethical institutions attract top AI and risk management professionals who want to work on responsible innovation rather than compliance firefighting.

At HES FinTech, we've helped clients deploy intelligent AI solutions that achieve full regulatory compliance across 30+ countries while unlocking competitive advantages. Our approach ensures that advanced machine learning capabilities work seamlessly within diverse regulatory frameworks, enabling institutions to leverage AI's transformative power without compromising compliance obligations anywhere they operate.

Our Take on AI-Powered Lending

The journey toward AI-powered lending is both inevitable and transformative, yet success is far from guaranteed. At HES FinTech, our extensive experience implementing AI solutions across the lending ecosystem has reinforced a fundamental truth: the institutions that will thrive in this new era are those that embrace both innovation and responsibility with equal vigor.

The regulatory landscape we've explored—from evolving CFPB enforcement to state-level initiatives—represents not obstacles to innovation, but guardrails that protect both institutions and consumers. Our work with clients has consistently demonstrated that compliance-first approaches to AI implementation actually accelerate time to market and reduce long-term costs. The alternative—rushing to deploy AI without proper governance—invariably leads to the costly remediation efforts, regulatory penalties, and reputational damage we've documented throughout this paper.

What sets successful AI implementations apart is the recognition that technology alone is insufficient. The institutions we've helped achieve sustainable success share common characteristics: they invest in explainable AI architectures from the outset, they build robust governance frameworks before deploying their first model, and they view regulatory requirements as minimum standards rather than maximum goals. Most importantly, they understand that in lending—an industry built on trust—transparency and fairness aren't just compliance checkboxes but competitive advantages.

Conclusion

Looking ahead, we see tremendous opportunity for those willing to do the hard work of responsible AI implementation. The lending landscape of 2030 will be fundamentally different from today, with AI enabling financial inclusion at unprecedented scale while maintaining fairness and transparency. However, reaching this future requires navigating the complex terrain we've mapped in this paper.

At HES FinTech, we remain committed to helping financial institutions realize AI's transformative potential while avoiding the pitfalls that can derail even the most promising initiatives. Our message is clear: embrace AI's power, but respect its responsibilities. Build systems that are not just fast and accurate, but also fair and explainable. Invest in governance and compliance as enthusiastically as you invest in algorithms and infrastructure.

We invite you to join us in building this future, one where AI doesn't just make lending faster and more profitable, but fundamentally fairer and more accessible. The journey may be complex, but with the right approach, partners, and commitment to responsible innovation, success is within reach.